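The problem with a direct-mapped cache is that addresses such as 0x00, 0x40 and 0x80 all map to the same cache line. If a program uses all three repeatedly, each access evicts the line brought in by the previous one (thrashing), so caching them gives little benefit. Set-associative caches were introduced to solve this issue.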
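A minimal Python sketch of the collision, assuming a toy direct-mapped cache of 4 lines with 16 bytes per line (64 bytes in total; these sizes are illustrative and chosen so that addresses 0x40 apart share a line). The names `line_index` and `count_misses` are hypothetical helpers, not any real API.

```python
LINE_SIZE = 16   # bytes per cache line (assumed toy value)
NUM_LINES = 4    # lines in the direct-mapped cache (assumed toy value)

def line_index(addr: int) -> int:
    """Direct mapped: every address has exactly one possible line."""
    return (addr // LINE_SIZE) % NUM_LINES

def count_misses(accesses):
    """Simulate the cache by remembering only the tag held in each line."""
    tags = [None] * NUM_LINES
    misses = 0
    for addr in accesses:
        idx = line_index(addr)
        tag = addr // (LINE_SIZE * NUM_LINES)
        if tags[idx] != tag:          # wrong (or no) line present: miss, replace it
            misses += 1
            tags[idx] = tag
    return misses

pattern = [0x00, 0x40, 0x80] * 4
print([line_index(a) for a in (0x00, 0x40, 0x80)])   # all three use line 0
print("misses:", count_misses(pattern), "of", len(pattern))
```

Every access in the repeated pattern misses, even though the other three lines of the cache stay empty.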

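In a 2-way set-associative cache, address 0x00 can be held in either "cache way 0" or "cache way 1", but not in both. The index selects a particular set (refer to the first figure), and the address tag is then compared with the tag stored in each way of that set.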

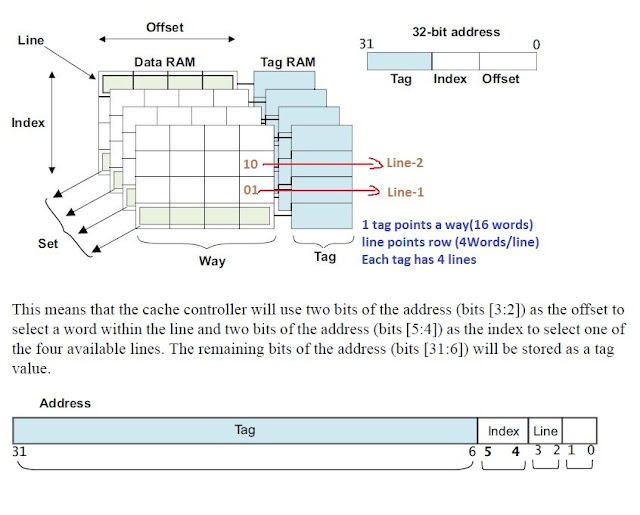
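The effect can be sketched by keeping the same total capacity as the toy cache above (4 lines of 16 bytes) but organising it as 2 sets of 2 ways. This is a simplified model, not ARM's implementation: the geometry is assumed, `simulate` is a hypothetical helper, and LRU is assumed as the replacement policy (policies are covered later in this post). Passing `num_ways=1` turns the same code into a direct-mapped cache.

```python
LINE_SIZE = 16   # assumed toy line size

def simulate(accesses, num_sets, num_ways):
    """Return the miss count for a set-associative cache with LRU replacement."""
    sets = [[] for _ in range(num_sets)]   # each set: list of tags, most recent last
    misses = 0
    for addr in accesses:
        block = addr // LINE_SIZE
        index = block % num_sets           # the index selects the set
        tag = block // num_sets            # the tag is compared against every way in the set
        ways = sets[index]
        if tag in ways:                    # an address lives in at most one way
            ways.remove(tag)               # hit: move it to the most-recently-used position
        else:
            misses += 1
            if len(ways) == num_ways:
                ways.pop(0)                # set full: evict the least recently used way
        ways.append(tag)
    return misses

pattern = [0x00, 0x40] * 4
print("direct mapped:", simulate(pattern, num_sets=4, num_ways=1))
print("2-way:        ", simulate(pattern, num_sets=2, num_ways=2))
```

0x00 and 0x40 still fall into the same set, but with two ways they can both stay resident, so only the first access to each misses.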

Real Example:-

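Cache size = 32KB (32768 bytes)

4-way set associative

So,

Size of each way = 32KB/4 = 8KB

No. of bytes in each line = 32

No. of lines in each way = 8KB/32 = 256 lines. So we need 8 bits of the address (the index) to select one of the 256 sets, and 5 bits (2^5 = 32) to select a byte within the line.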
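The arithmetic above can be checked with a short Python sketch. It assumes a 32-bit address and power-of-two sizes; `cache_geometry` and `split_address` are illustrative names, and 0x12345678 is just an arbitrary sample address.

```python
def cache_geometry(cache_size, num_ways, line_size, addr_bits=32):
    """Derive way size, sets and address-field widths (sizes assumed powers of two)."""
    way_size = cache_size // num_ways             # bytes in each way
    lines_per_way = way_size // line_size         # = number of sets
    offset_bits = line_size.bit_length() - 1      # log2(line size)
    index_bits = lines_per_way.bit_length() - 1   # log2(number of sets)
    tag_bits = addr_bits - index_bits - offset_bits
    return way_size, lines_per_way, offset_bits, index_bits, tag_bits

def split_address(addr, offset_bits, index_bits):
    """Split an address into (tag, index, offset) fields."""
    offset = addr & ((1 << offset_bits) - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset

geom = cache_geometry(32 * 1024, 4, 32)
print(geom)                                   # (8192, 256, 5, 8, 19)
tag, index, offset = split_address(0x12345678, geom[2], geom[3])
print(hex(tag), hex(index), hex(offset))      # 0x91a2 0xb3 0x18
```

With a 32-bit address, the remaining 19 bits form the tag stored alongside each line.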

Cache controller:-

- A hardware block that manages the cache memory in a way that is (largely) invisible to the program.

- It takes read and write memory requests from the core and performs the necessary actions on the cache memory or the external memory.

- When it receives a request from the core, it must check whether the requested address is in the cache. This is known as a cache look-up.

- If the requested instruction or data is not in the cache, it is a cache miss, and the request is passed to the next level of the memory hierarchy (for example, the L2 cache).

- Once the instruction or data is found, a cache linefill happens: the whole cache line containing the requested address is loaded into the cache.
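The look-up, miss and linefill steps above can be sketched as a toy controller in Python. This is a simplified model under stated assumptions: the cache is direct mapped (8 lines of 32 bytes), the `CacheController` class is hypothetical, and a plain byte string stands in for L2 or external memory.

```python
class CacheController:
    """Toy direct-mapped cache controller in front of a slower next level (assumed model)."""

    def __init__(self, next_level: bytes, num_lines=8, line_size=32):
        self.next_level = next_level
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines
        self.data = [bytes(line_size)] * num_lines
        self.hits = self.misses = 0

    def read(self, addr):
        block = addr // self.line_size
        index, tag = block % self.num_lines, block // self.num_lines
        if self.tags[index] == tag:          # cache look-up: hit
            self.hits += 1
        else:                                # cache miss: ask the next level
            self.misses += 1
            base = block * self.line_size    # linefill loads the whole aligned line
            self.data[index] = self.next_level[base:base + self.line_size]
            self.tags[index] = tag
        return self.data[index][addr % self.line_size]

memory = bytes(range(256)) * 4               # stand-in for L2 / external memory (1KB)
ctrl = CacheController(memory)
values = [ctrl.read(a) for a in range(64)]   # 64 sequential byte reads
print(ctrl.misses, ctrl.hits)                # 2 62
```

Because each miss fills a whole 32-byte line, sequential reads miss only once per line.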

Virtual and physical tags and indexes:-

VIVT (Virtually Indexed, Virtually Tagged) ==> Bad

The processor uses the virtual address (VA) to provide both the index and the tag values.

Advantage:-

The core can do a cache look-up without first translating the VA to a physical address (PA).

Disadvantage:-

Changing the virtual-to-physical mappings in the system means that the cache must first be cleaned and invalidated, which has a significant performance impact.

VIPT (Virtually Indexed, Physically Tagged) ==> Good (ARM follows this)

Advantage:-

With physical tagging, changes to the virtual-to-physical mappings no longer require the cache to be invalidated. The tag stored with each line comes from the PA, which stays the same even when the VA mapped to it changes.

Using a virtual index, the cache hardware can read the tag value from the appropriate line in each way in parallel with the virtual-to-physical address translation, instead of waiting for it, giving a fast cache response. The translated PA is then compared with those tags.
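The difference between VIVT and VIPT when a mapping changes can be sketched in Python. Everything here is a hypothetical toy model: a 64-byte cache (so index and offset fit inside a 4KB page), a dictionary as the page table, and made-up addresses. Both caches use the VA for the index; only the source of the tag differs.

```python
PAGE_SIZE = 4096
LINE_SIZE = 16
NUM_LINES = 4                      # 64-byte toy cache (assumed geometry)

phys_mem = bytearray(0x4000)       # hypothetical 16KB physical memory
phys_mem[0x1000] = ord("A")
phys_mem[0x2000] = ord("B")
page_table = {0x4: 0x1}            # hypothetical mapping: VA page 0x4 -> PA page 0x1

def translate(va):
    return page_table[va // PAGE_SIZE] * PAGE_SIZE + va % PAGE_SIZE

class ToyCache:
    def __init__(self, physically_tagged):
        self.physically_tagged = physically_tagged
        self.lines = [None] * NUM_LINES                  # each entry: (tag, line bytes)

    def read(self, va):
        index = (va // LINE_SIZE) % NUM_LINES            # virtual index in both schemes
        tag_addr = translate(va) if self.physically_tagged else va
        tag = tag_addr // (LINE_SIZE * NUM_LINES)
        entry = self.lines[index]
        if entry is None or entry[0] != tag:             # miss: fill from physical memory
            pa = translate(va)
            base = pa - pa % LINE_SIZE
            entry = (tag, bytes(phys_mem[base:base + LINE_SIZE]))
            self.lines[index] = entry
        return chr(entry[1][va % LINE_SIZE])

vivt, vipt = ToyCache(False), ToyCache(True)
vivt.read(0x4000)
vipt.read(0x4000)                  # both caches now hold the line from PA 0x1000
page_table[0x4] = 0x2              # remap VA 0x4000 -> PA 0x2000 without cache maintenance
print("VIVT:", vivt.read(0x4000))  # 'A': the VA tag still matches, so the data is stale
print("VIPT:", vipt.read(0x4000))  # 'B': the PA tag no longer matches, so it refetches
```

The stale VIVT hit is exactly why that scheme needs the cache cleaned and invalidated on every remap.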

PIPT:-

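For a 4-way set associative 32KB or 64KB cache, each way is 8KB or 16KB, so selecting the index (together with the line offset) needs address bit [12] (32KB) or bits [13:12] (64KB).

If 4KB pages are used in the MMU, only bits [11:0] are guaranteed to be identical in the virtual and physical address, so bits [13:12] of the virtual address might not be equal to bits [13:12] of the physical address. The same physical line could then be cached at two different indexes (an alias).

The solution is PIPT (Physically Indexed, Physically Tagged): both the index and the tag come from the PA, so a physical line always has exactly one possible set.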
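A small Python sketch of the aliasing problem. It lists which index bits lie above a 4KB page offset for a given cache size, then shows two virtual addresses that (hypothetically) map to the same physical address landing in different sets under virtual indexing. The 32-byte line and 256-set geometry follow the 32KB 4-way example earlier in the post; `alias_bits` and `set_index` are illustrative names.

```python
PAGE_SIZE = 4 * 1024   # 4KB pages
LINE_SIZE = 32         # assumed line size
NUM_SETS = 256         # 32KB, 4-way, 32-byte lines

def alias_bits(cache_size, num_ways, page_size=PAGE_SIZE):
    """Index bits that lie above the page offset (empty list: VIPT cannot alias)."""
    way_size = cache_size // num_ways            # index + line offset span one way
    if way_size <= page_size:
        return []
    page_bits = page_size.bit_length() - 1       # 12 for 4KB pages
    way_bits = way_size.bit_length() - 1         # 13 for an 8KB way, 14 for a 16KB way
    return list(range(page_bits, way_bits))

def set_index(addr):
    """Set index for the assumed 32KB, 4-way, 32-byte-line cache."""
    return (addr // LINE_SIZE) % NUM_SETS

print(alias_bits(32 * 1024, 4))   # [12]
print(alias_bits(64 * 1024, 4))   # [12, 13]
print(alias_bits(16 * 1024, 4))   # []

# Hypothetical mapping: VA 0x0000 and VA 0x1000 both point at PA 0x5000.
va1, va2, pa = 0x0000, 0x1000, 0x5000
print(set_index(va1), set_index(va2), set_index(pa))   # 0 128 128
```

Virtual indexing places the same physical line in set 0 or set 128 depending on which VA is used; physical indexing always picks set 128.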

Cache policies:-

Allocation policy

Read allocate ==> Policy allocates a cache line only on a read miss.

Write allocate ==> Policy allocates a cache line for either a read or a write that misses in the cache.
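The two allocation policies differ only on a write miss. A minimal Python sketch, assuming a cache with unlimited capacity so that only allocation decisions matter; `simulate` is a hypothetical helper and the access pattern is made up.

```python
def simulate(accesses, write_allocate):
    """accesses: list of ("R" or "W", line_address). Returns (misses, cached lines)."""
    cached = set()                         # lines currently held (capacity ignored)
    misses = 0
    for op, line in accesses:
        if line in cached:
            continue                       # hit
        misses += 1
        if op == "R" or write_allocate:    # read misses always allocate
            cached.add(line)               # write misses allocate only under write-allocate
        # read-allocate write miss: the write goes to the next level, no line is allocated
    return misses, cached

pattern = [("W", 0x100), ("W", 0x100), ("R", 0x100)]
print(simulate(pattern, write_allocate=False))   # (3, {256})
print(simulate(pattern, write_allocate=True))    # (1, {256})
```

Under read-allocate, repeated writes to a line that is not cached keep missing until a read finally brings the line in.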

Replacement policy

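Round-robin ==> The victim way is chosen in turn, cycling through the ways of the set.

Pseudo-random ==> The victim way is chosen by a pseudo-random number generator.

Least Recently Used (LRU) ==> The way that has gone unused for the longest time is replaced.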
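The three replacement policies can be compared on a single 4-way set with a short Python sketch. This is a simplified model: `CacheSet` is hypothetical, empty ways are always filled first, and Python's `random.Random` stands in for the hardware pseudo-random generator.

```python
import random

class CacheSet:
    """One set of a set-associative cache; picks a victim way on a miss."""

    def __init__(self, policy, ways=4, seed=0):
        self.policy = policy
        self.tags = [None] * ways
        self.next_rr = 0                     # round-robin pointer
        self.lru = list(range(ways))         # way numbers, least recently used first
        self.rng = random.Random(seed)       # stand-in for a hardware PRNG

    def _touch(self, way):
        self.lru.remove(way)
        self.lru.append(way)

    def _victim(self):
        if None in self.tags:                # fill empty ways first
            return self.tags.index(None)
        if self.policy == "round-robin":
            way = self.next_rr
            self.next_rr = (self.next_rr + 1) % len(self.tags)
            return way
        if self.policy == "pseudo-random":
            return self.rng.randrange(len(self.tags))
        return self.lru[0]                   # LRU

    def access(self, tag):
        if tag in self.tags:                 # hit
            self._touch(self.tags.index(tag))
            return True
        way = self._victim()                 # miss: replace the chosen way
        self.tags[way] = tag
        self._touch(way)
        return False

pattern = ["A", "B", "C", "D", "A", "E"]
results = {}
for policy in ("round-robin", "pseudo-random", "LRU"):
    s = CacheSet(policy)
    for tag in pattern:
        s.access(tag)
    results[policy] = s.tags
    print(policy, s.tags)
```

After "A" is reused, LRU evicts "B" to make room for "E", while round-robin simply evicts way 0 and throws away the recently used "A".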

Write policy

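Write-through ==> Writes update both the cache and main memory, so the two stay coherent.

Write-back ==> Writes are performed only to the cache, and not to main memory. The line is marked dirty and written back to main memory when it is evicted (or cleaned).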
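A minimal Python sketch of the two write policies for one location whose line is already in the cache. Assumptions: main memory starts at 0, and `run` is a hypothetical helper that performs some writes and then evicts the line. It counts how many writes reach main memory and what memory holds just before eviction.

```python
def run(values, write_back):
    """Write `values` to one cached location, then evict its line.
    Returns (writes that reached main memory, memory contents before eviction)."""
    cached, dirty = None, False
    memory, memory_writes = 0, 0
    for v in values:
        cached = v                    # the cache copy is always updated on a write hit
        if write_back:
            dirty = True              # main memory is now stale
        else:
            memory = v                # write-through: main memory updated as well
            memory_writes += 1
    before_eviction = memory
    if dirty:                         # evicting (or cleaning) a dirty line writes it back once
        memory = cached
        memory_writes += 1
    return memory_writes, before_eviction

print(run([1, 2, 3], write_back=False))   # (3, 3)
print(run([1, 2, 3], write_back=True))    # (1, 0)
```

Write-back saves memory traffic, but until the dirty line is written back, main memory holds a stale value. That stale copy is what matters when a DMA engine reads main memory directly.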

Interesting Cache Issues during DMA Operation:-

https://lwn.net/Articles/2265/

https://lwn.net/Articles/2266/
